Fulbright Chronicles, Volume 4, Number 1 (2025)

Author

Mariofanna Milanova

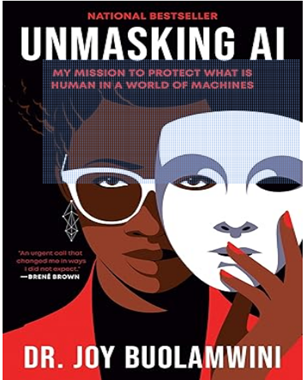

Unmasking AI: My Mission to Protect What Is Human in a World of Machines, by Joy Buolamwini, who was a Fulbright Scholar to Zambia in 2013.

As a Professor of Computer Science and a Fulbright Scholar, I found Dr. Joy Buolamwini’s book Unmasking AI: My Mission to Protect What Is Human in a World of Machines both inspiring and thought-provoking. The book blends personal memoir with a powerful call to action. Dr. Buolamwini traces her journey: growing up as an African-American girl in Mississippi and Tennessee, her early passion for technology and art, her experiences as both a Rhodes Scholar and a Fulbright Scholar, and finally her pioneering research at the Massachusetts Institute of Technology. It was at MIT that she exposed significant racial and gender biases in AI systems. A defining moment in her work was her encounter with what she calls the “coded gaze”: facial recognition software failed to detect her face unless she wore a white mask, revealing the deep-rooted biases embedded in these technologies. This experiment became the foundation for the 2020 documentary Coded Bias, directed by Shalini Kantayya (available on Netflix). The film features Dr. Buolamwini’s personal story and investigates how facial recognition systems frequently misidentify darker-skinned and female faces, raising critical concerns about algorithmic bias and its threat to civil liberties. Coded Bias underscores the urgent need for ethical and inclusive AI development.

Through her personal perspective, Dr. Buolamwini examines the broader impact of AI, demonstrating how these technologies can reinforce and amplify existing social inequalities. She introduces the term “excoded” to describe individuals who are marginalized or excluded as a result of biases embedded within technological systems.

Although the book was published in 2023, its central concern, coded bias, remains highly relevant today: despite significant advances in AI technology, modern AI systems continue to exhibit notable biases. Research has shown that generative AI models such as Midjourney, Stable Diffusion, and DALL·E 2 often reinforce gender and racial stereotypes, frequently portraying women and African Americans in stereotypical roles or underrepresenting them altogether. These biases help perpetuate harmful narratives and can negatively shape public perception.

AI technologies used in critical sectors like healthcare, criminal justice, and employment also continue to mirror and amplify existing societal inequalities. Facial recognition systems, for example, have been shown to produce higher error rates when analyzing darker-skinned individuals, particularly women, resulting in misidentifications and civil rights concerns.

Buolamwini adopts an intersectional lens to examine how multiple forms of discrimination, including racism, sexism, colorism, and ableism, intersect and become embedded within AI systems. She argues that these biases are not merely technical shortcomings but reflect the perspectives and limitations of the people who design and deploy these technologies. While awareness is growing and an increasing number of resources address bias in AI, significant challenges remain. According to Buolamwini, addressing these biases is critical to building AI systems that are fair, equitable, and inclusive, so that technology serves the needs of all members of society.

The author also outlines several key strategies for addressing algorithmic bias: conducting independent algorithmic audits, developing inclusive and representative datasets, establishing comprehensive AI regulations, and fostering a culture of continuous learning. These recommendations highlight the importance of a holistic approach to AI development, one rooted in ethics, inclusivity, and accountability. By implementing such practices, we can work toward AI systems that serve all of humanity fairly and equitably.

I was especially inspired by the final chapter, “Cups of Hope.” In this reflective section, Dr. Buolamwini emphasizes the importance of hope and perseverance, even in the face of overwhelming systemic challenges. The metaphor of “cups of hope” symbolizes small yet meaningful moments of optimism and collective action that sustain the ongoing fight for justice. This chapter stands in contrast to the harsh realities of algorithmic bias, offering a vision for transformative change. It encourages readers to stay committed to ethical AI advocacy, despite inevitable setbacks, and to find solidarity with others striving for a more just and inclusive technological future. Through “Cups of Hope,” Dr. Buolamwini reminds us that while the fight for algorithmic justice is difficult, it is not hopeless. Collective action, persistence, and shared optimism are essential to building AI systems that truly serve the needs of all people.

Ultimately, Unmasking AI is a powerful call to action. Dr. Buolamwini founded the Algorithmic Justice League to address the harms caused by AI systems and to advocate for greater transparency and accountability. She invites readers from all backgrounds, not just those with technical expertise, to join the conversation about AI’s role in society and to push for policies that ensure these technologies benefit everyone, not just a privileged few.

Joy Buolamwini, Unmasking AI: My Mission to Protect What Is Human in a World of Machines. New York: Random House, 2023. 336 pages. $17.00.

Biography

Mariofanna Milanova has been a professor in the Department of Computer Science at the University of Arkansas at Little Rock since 2001. She received an M.Sc. in Expert Systems and Artificial Intelligence and a Ph.D. in Engineering and Computer Science from the Technical University, Sofia, Bulgaria. She also conducted post-doctoral research in visual perception in Germany. She is an IEEE Senior Member, a Fulbright Scholar, and an NVIDIA Deep Learning Institute University Ambassador. Her work has been supported by NSF, NIH, DARPA, DoD, Homeland Security, NATO, Nokia Bell Labs (New Jersey, USA), and Nokia (Finland). She has published more than 120 articles, including over 53 journal papers, 35 book chapters, and numerous conference papers, and she holds two patents. She can be reached at mgmilanova@ualr.edu.